Meltem Ballan: What is a 5GW Data Center for AI?

Recent news covered Sam Altman’s proposal to the government to build multiple large-scale data centers with significant power requirements to support the growing computational needs of AI. At this stage, there is no single sustainable energy source, and no single place on Earth, that can readily support data centers of this size. This calls for diligence, analysis, and openness to innovation. Here are some points that I have summarized for my readers to think about:

Impossibility of Building Large-Scale Data Centers Single-Handedly

Setting everything else aside, it is impossible to build a 5 GW data center single-handedly. Building multiple 5 GW data centers is beyond the capacity of a single company or individual, no matter how well-resourced. For comparison, 5 GW is roughly enough to power New York (demand jumps to about 10 GW only in the summer), and building infrastructure to handle such energy demands requires coordination with utility companies, local governments, and federal agencies.
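To put the 5 GW figure in terms of energy rather than power, here is a minimal sketch of the arithmetic. It assumes the facility draws its full rated power around the clock, which is a simplification; the function name and the `utilization` parameter are my own illustrative choices.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy in TWh used in one year by a load of power_gw gigawatts.

    utilization is the assumed fraction of rated power actually drawn
    on average (1.0 means full power all year).
    """
    energy_gwh = power_gw * HOURS_PER_YEAR * utilization
    return energy_gwh / 1000.0  # 1 TWh = 1,000 GWh


if __name__ == "__main__":
    # A single 5 GW facility at full load for a year
    print(f"{annual_energy_twh(5.0):.1f} TWh per year")
```

Under that full-load assumption, one such facility would consume about 43.8 TWh per year, and every additional facility adds the same amount again.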

The scale of OpenAI’s proposal implies that the U.S. government and other institutional players would have to be heavily involved to provide the necessary regulatory support, land allocation, energy sourcing, and technology infrastructure. No single entity could achieve this alone, as it requires a network of stakeholders that includes energy companies, environmental regulators, and industry experts.

Environmental Impact Even with Green and Nuclear Energy Sources

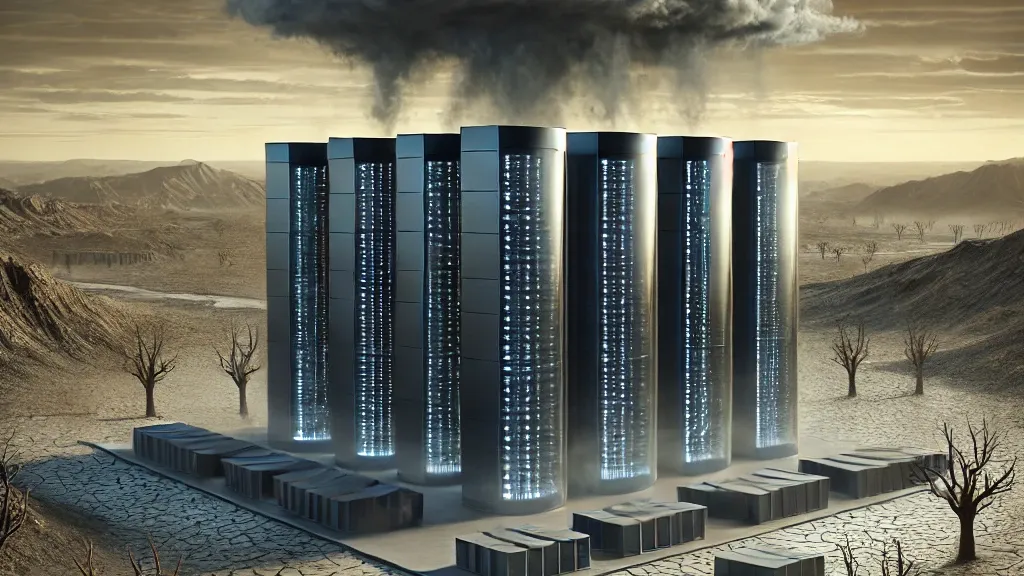

The environmental footprint of a data center at this scale is hard for the environment to bear, even if we were to use green sources. A data center drawing 5 GW of power will have a massive carbon footprint unless it is fully powered by green energy. However, even renewable energy sources (solar, wind) come with environmental costs, such as land use and material production, and their intermittency requires energy storage solutions.
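How large the operational carbon footprint is depends heavily on the carbon intensity of the electricity supply. The sketch below shows the relationship under stated assumptions: a constant 5 GW load all year, and two intensity values that are hypothetical placeholders chosen only for illustration, not measurements of any real grid. It covers operational emissions only, not land use or materials.

```python
def annual_emissions_mt(power_gw: float, intensity_g_per_kwh: float) -> float:
    """Annual CO2 emissions, in million tonnes, for a constant load.

    intensity_g_per_kwh is the grams of CO2 emitted per kWh supplied.
    """
    hours_per_year = 24 * 365
    energy_kwh = power_gw * 1e6 * hours_per_year  # 1 GW = 1e6 kW
    grams = energy_kwh * intensity_g_per_kwh
    return grams / 1e12  # 1 million tonnes = 1e12 grams


if __name__ == "__main__":
    # Hypothetical intensities for illustration only
    scenarios = {
        "hypothetical fossil-heavy supply": 400.0,
        "hypothetical low-carbon supply": 20.0,
    }
    for label, intensity in scenarios.items():
        mt = annual_emissions_mt(5.0, intensity)
        print(f"{label}: {mt:.2f} Mt CO2 per year")
```

The point of the sketch is the linear relationship: at this scale, every gram per kWh in the supply mix translates into tens of thousands of tonnes of CO2 per year.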

While nuclear power offers a low-carbon energy source, it is not without its challenges, including public opposition, high upfront costs, and long build times. The environmental benefit of nuclear power (reduced carbon emissions) does not cancel out the nuclear waste it can produce or the impact of water usage for cooling reactors.

Beyond energy consumption, the materials needed to build and maintain these massive data centers (metals, rare earth minerals, concrete, etc.) have significant environmental impacts. Moreover, electronic waste from outdated servers contributes to long-term ecological damage, even if the data centers are powered by clean energy.

The solution is not black and white, and distributed micro centers might fill the gap.

Rather than concentrating such immense resources in a few mega data centers, a distributed approach would provide resilience in case of localized outages or environmental disasters. This approach could also minimize energy transmission losses and reduce the environmental burden on any single location.

Distributed centers can leverage local green energy sources (solar, wind, hydro) and avoid relying on a single large-scale energy source like nuclear or fossil fuels. This allows for better integration into regional energy grids and the use of renewable energy in a more adaptable manner.

Smaller data centers distributed across various climates could take advantage of natural cooling solutions, further reducing the energy required for artificial cooling systems. For instance, data centers in cooler climates can use outside air for cooling rather than energy-intensive air conditioning.
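One common way to reason about cooling overhead is Power Usage Effectiveness (PUE), the ratio of total facility power to the power that reaches the IT equipment. The following is a rough sketch of how a lower PUE changes the picture for a fixed power budget; the PUE values used are hypothetical, not figures for any particular site.

```python
def it_power_gw(total_power_gw: float, pue: float) -> float:
    """Power available to IT equipment given total facility power and PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return total_power_gw / pue


def overhead_power_gw(total_power_gw: float, pue: float) -> float:
    """Power spent on cooling and other non-IT overhead."""
    return total_power_gw - it_power_gw(total_power_gw, pue)


if __name__ == "__main__":
    # Hypothetical PUE values for illustration only
    for pue in (1.6, 1.25):
        overhead = overhead_power_gw(5.0, pue)
        print(f"PUE {pue}: {overhead:.2f} GW of overhead out of 5 GW")
```

In this sketch, moving from a PUE of 1.6 to 1.25 frees up close to 0.9 GW of a 5 GW budget for actual computation, which is why siting and natural cooling matter so much.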

It is also important to highlight that distributed data centers will bring many opportunities to local communities, such as high-speed internet and fiber services. Without strong telecommunication services, distributed centers will face latency issues, so broadband providers will have a big role to play.

Unfortunately, the news release by Sam Altman treats data center operation itself as a commodity; the industry, however, needs to get innovative.

Data centers are often seen as interchangeable commodities focused on scale and cost-efficiency, but this overlooks the real challenges of innovation in areas like power efficiency, cooling, and security.

Moreover, there are AI-specific challenges that require innovation and use-case-based studies with enterprise users. The needs of AI models like those developed by OpenAI are pushing the boundaries of what data centers can handle. Innovations in hardware (e.g., specialized AI chips, cooling technologies) and software (e.g., distributed computing frameworks) are critical to meet these new demands. This is not just about building bigger data centers but smarter ones that can optimize performance for AI workloads.

The large-scale nature of these projects necessitates government involvement. Investments in data infrastructure should not be concentrated in a few companies. Democratizing access to data centers through government support and incentives could ensure a broader, more competitive landscape. This can fuel innovation, especially for smaller companies or academic institutions that can’t afford to build their own facilities but need advanced computational resources.

Let’s dig deeper into what innovation and government support mean. Government support through public-private partnerships is essential for the success of data centers, especially when green or nuclear energy solutions are under consideration, and it should also provide incentives for small and new players in the field. OpenAI’s pitch to the White House highlights the need for government backing to tackle the enormous energy and regulatory hurdles; however, it doesn’t address the need for challengers or, in this economy, their need for institutional and governmental support.

Sustainable and green solutions cannot remain just conversations, and there is no quick fix. Investments in more energy-efficient technologies, cooling systems, and data center management software are necessary to keep up with the ever-growing demand for AI compute power. Government subsidies and incentives could accelerate innovation in these areas.

A decentralized network of data centers, potentially funded through government grants or incentives, would not only spread out the energy demand but also promote innovation across a wider range of geographical areas. This could democratize access to advanced AI resources, allowing smaller organizations and local governments to build or access smaller, specialized data centers.

All in all, while OpenAI’s vision for large-scale 5GW data centers reflects the increasing computational needs of AI, the environmental and logistical challenges of building such facilities are immense. Even with green or nuclear energy, the environmental impact remains significant. A distributed approach to data centers, coupled with innovation in infrastructure, energy management, and cooling, would be a more resilient, sustainable, and scalable solution. Moreover, the perception of data centers as mere commodities is outdated; innovation is critical to handling the demands of AI and High-Performance Computing (HPC). Government support and investment democratization are essential to foster this innovation and ensure that these advancements are accessible to more than just a few large players.

We took on this challenge and started Concrete Engine, against all odds, to democratize computation and AI. We have already built a strong network within Purdue University and received support from institutions like Evolve Holding. We are still looking for partners, use cases to work on together, and investors who want to be part of the future of computation and AI.